# Deisobutanizer PRO/II simulation software

These two trends complicate the translation of computing power into performance, especially for a program with either intensive data accesses or complex patterns in its data accesses or control flow paths. Unfortunately, both attributes are present, and will persist, in a class of important applications. For instance, many scientific simulations deal with a large volume of data. Meanwhile, as most real-world processes are non-uniform and evolving (e.g., the evolution of a galaxy or the process of a drug injection), both the computations and the data accesses of these programs tend to be irregular and dynamically changing. Currently, the lack of support for these applications on modern CMPs severely limits their performance. On GPUs, as our recent study shows and other studies echo, speedups of integer factors are possible for a set of GPU applications when memory accesses or control flows are streamlined. On multicore CPUs, our studies show that traditional locality enhancement, being oblivious to the new features of the multicore memory hierarchy, may even cause large slowdowns in data-intensive dynamic applications. The severity of these issues is expected to worsen as the two hardware trends continue. Some recent studies try to match software with the trends, but only in a limited scope or manner.

On the recent IBM Power7 architecture, for instance, four hardware contexts (or SMT threads) in a core share the entire memory hierarchy, all cores in one chip share an on-chip L3 cache, and cores across chips share L3 and main memory through off-chip connections.

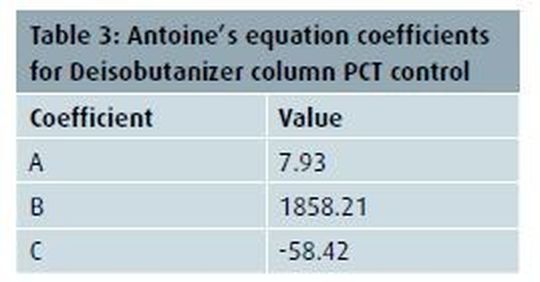

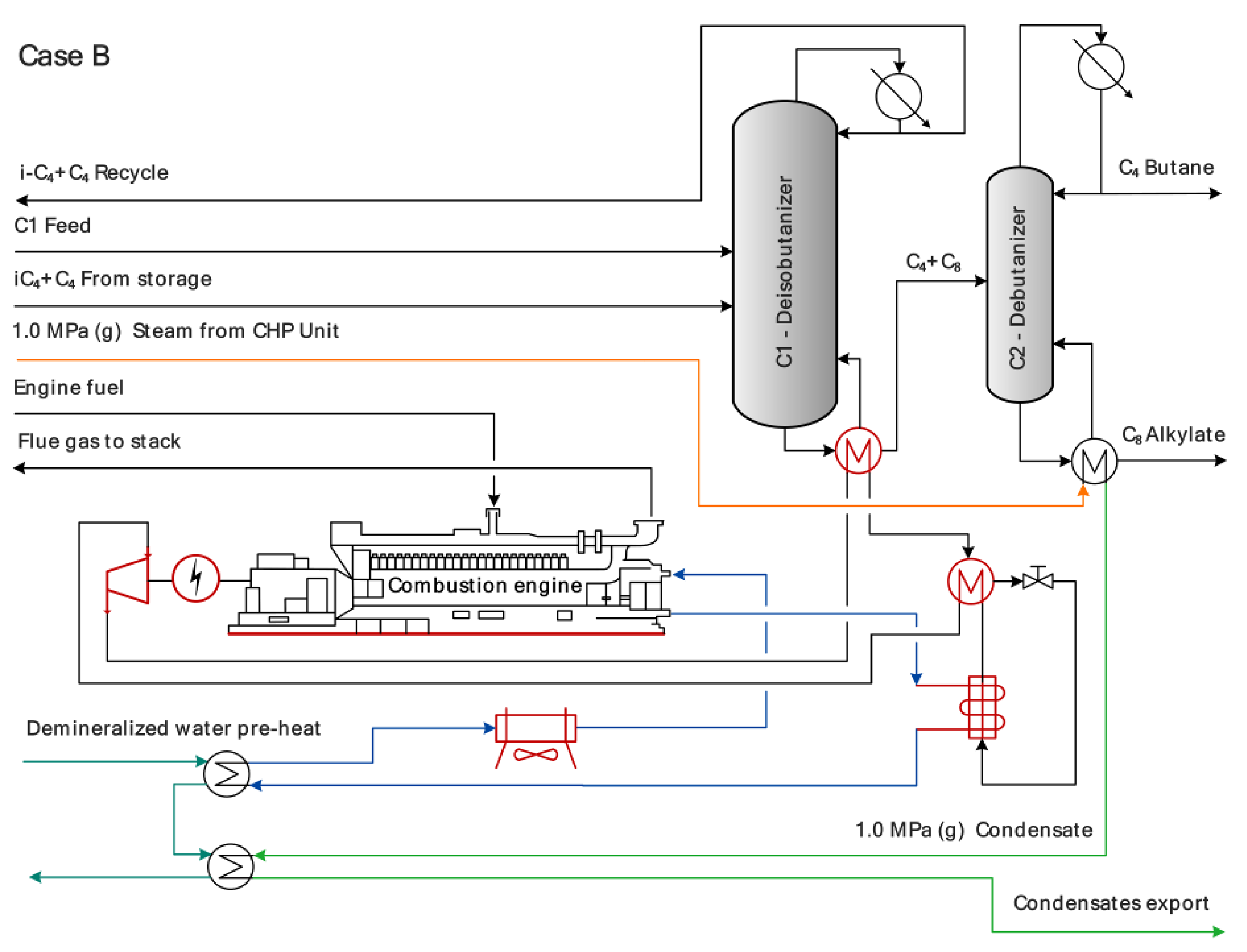

The development of modern processors exhibits two trends that complicate the optimization of modern software. The first is the increasing sensitivity of processors' throughput to irregularities in computation. With more processors produced through a massive integration of simple cores, future systems will increasingly favor regular data-level parallel computations, but deviate from the needs of applications with complex patterns. Some evidence is already visible on Graphics Processing Units (GPUs): irregular data accesses (e.g., indirect array references) and conditional branches limit the performance of many GPU applications to a level an order of magnitude below the GPU's peak. The second hardware trend is the growing gap between memory bandwidth and the aggregate speed (that is, the sum of all cores' computing power) of a Chip Multiprocessor (CMP). Despite the capped growth of peak CPU speed, the aggregate speed of a CMP keeps increasing as more cores are placed on a single chip. It is expected that by 2018, node concurrency in an exascale system will increase by hundreds of times, whereas memory bandwidth will expand by only 10 to 20 times. Consequently, data movement and storage are expected to consume more than 70% of the total system power. Bridging this gap is difficult, and the complexities of the modern CMP memory hierarchy make it even harder: data caches become shared among computing units, and the sharing is often non-uniform, since whether two computing units share a cache depends on their proximity and on the level of the cache.

Plant upsets, some large but most small, interfere with step tests and hamper efforts to produce good dynamic models of the plant. They make it hard to design the highly responsive yet stable controls that are needed. When a set of controls is put in place, these same upsets and process fluctuations make testing and tuning the controls a very time-consuming exercise. There is clearly a need for a good way to deal with these plant problems. Good control technology is required that allows the control engineer to develop dynamic models of the plant, determine the best control strategies, and then implement and tune them. These tools must be usable in the environment normally found in an operating plant. This paper deals with some of those tools. It is based on work that has been done at Suncor's Sarnia Refinery, and on work that is in progress now. The techniques described in the paper are those that have been successfully applied in a real plant. This experience demonstrates that the use of advanced control technology allows faster implementation of better controls when dealing with a process that is poorly [...]
